Where will I be at OpenWorld / Oak Table World?

Here’s where I’ll be! If you use Google Calendar, you can click on individual entries above and select "Copy to my calendar" - which of course you’ll want to do for all the ones …

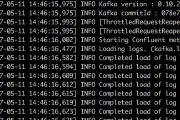

Apache Kafka

Apache Kafka

goldengate

goldengate

conferences

conferences

lsblk

lsblk

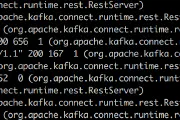

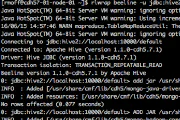

Apache Kafka

Apache Kafka

Apache Kafka

Apache Kafka

vmdk

vmdk

Apache Kafka

Apache Kafka

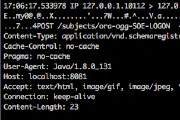

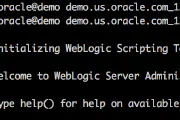

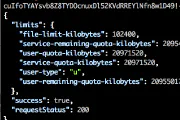

ogg

ogg

Apache Kafka

Apache Kafka

Apache Kafka

Apache Kafka

ogg

ogg

Apache Kafka

Apache Kafka

spark

spark

apache drill

apache drill

mogodb

mogodb

lxc

lxc

edgemax

edgemax

docker

docker

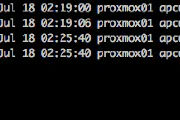

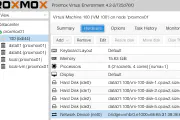

proxmox

proxmox

OBIEE

OBIEE

OBIEE

OBIEE

OBIEE

OBIEE

timelion

timelion

OBIEE

OBIEE

OBIEE

OBIEE

OBIEE

OBIEE